Why use o3-pro?

Unlike general-purpose models like GPT-4o that prioritize speed, broad knowledge, and making users feel good about themselves, o3-pro uses a chain-of-thought simulated reasoning process to devote more output tokens toward working through complex problems, making it generally better for technical challenges that require deeper analysis. But it’s still not perfect.

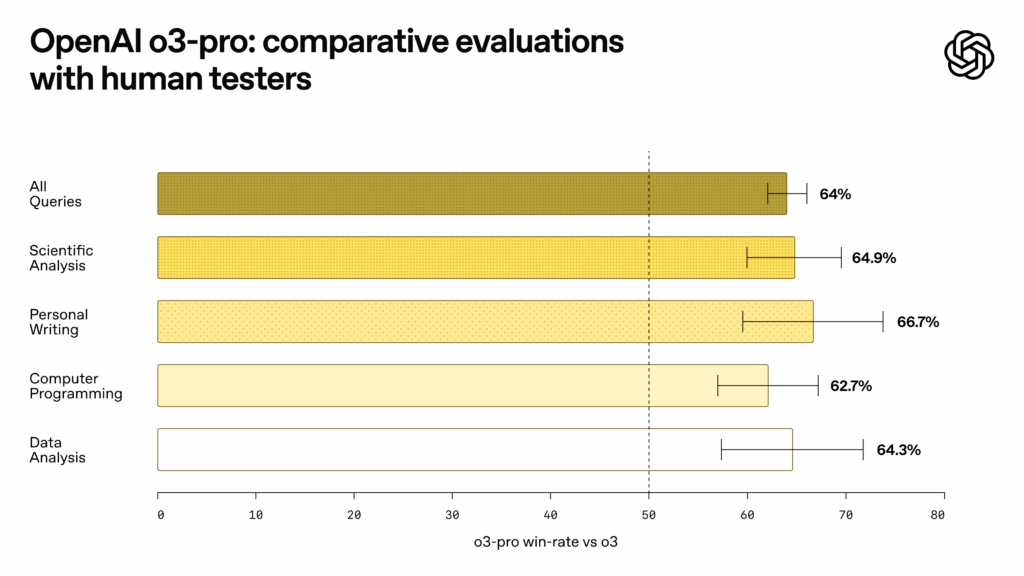

Measuring so-called “reasoning” capability is tricky since benchmarks can be easy to game through cherry-picking or training data contamination, but OpenAI reports that o3-pro is popular among testers, at least. “In expert evaluations, reviewers consistently prefer o3-pro over o3 in every tested category and especially in key domains like science, education, programming, business, and writing help,” writes OpenAI in its release notes. “Reviewers also rated o3-pro consistently higher for clarity, comprehensiveness, instruction-following, and accuracy.”

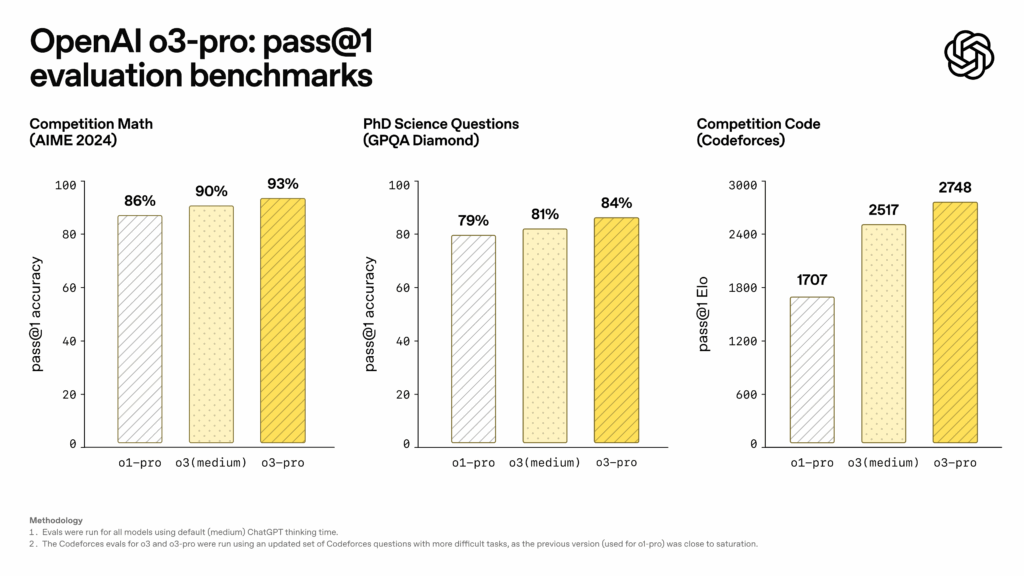

OpenAI shared benchmark results showing o3-pro’s reported performance improvements. On the AIME 2024 mathematics competition, o3-pro achieved 93 percent pass@1 accuracy, compared to 90 percent for o3 (medium) and 86 percent for o1-pro. The model reached 84 percent on PhD-level science questions from GPQA Diamond, up from 81 percent for o3 (medium) and 79 percent for o1-pro. For programming tasks measured by Codeforces, o3-pro achieved an Elo rating of 2748, surpassing o3 (medium) at 2517 and o1-pro at 1707.
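To give a rough sense of what those Elo gaps mean, here is a minimal sketch that converts a rating difference into an expected head-to-head score. It assumes the standard logistic Elo formula with a 400-point scale; Codeforces ratings are Elo-like, and OpenAI has not said this exact formula applies, so treat the output as illustrative only. The function name `elo_expected_score` is hypothetical, and the ratings are the ones reported above.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo logistic model.

    Assumption: the classic 400-point scale, which may not match
    Codeforces' own rating computation exactly.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Elo ratings as reported by OpenAI for the Codeforces benchmark.
reported = {"o3-pro": 2748, "o3 (medium)": 2517, "o1-pro": 1707}

for name, rating in reported.items():
    if name == "o3-pro":
        continue
    score = elo_expected_score(reported["o3-pro"], rating)
    print(f"o3-pro vs {name}: expected score {score:.3f}")
```

Under that assumption, the 231-point gap over o3 (medium) corresponds to an expected score of roughly 0.79 for o3-pro, while the gap over o1-pro is large enough that the expected score approaches 1.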

When reasoning is simulated

It is easy for laypeople to be thrown off by the anthropomorphic claims of “reasoning” in AI models. In this case, as with the borrowed anthropomorphic term “hallucinations,” “reasoning” has become a term of art in the AI industry that basically means “devoting more compute time to solving a problem.” It doesn’t necessarily mean the AI models systematically apply logic or possess the ability to construct solutions to truly novel problems. This is why Ars Technica continues to use the term “simulated reasoning” (SR) to describe these models. They’re simulating a human-style reasoning process that doesn’t necessarily produce the same results as human reasoning when faced with novel challenges.
